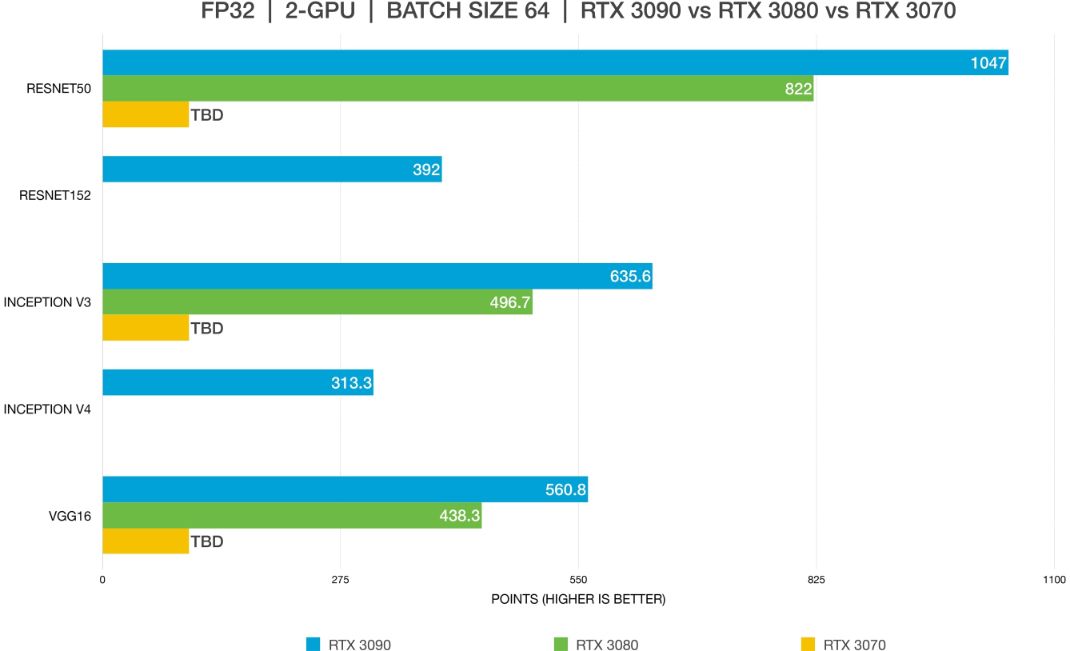

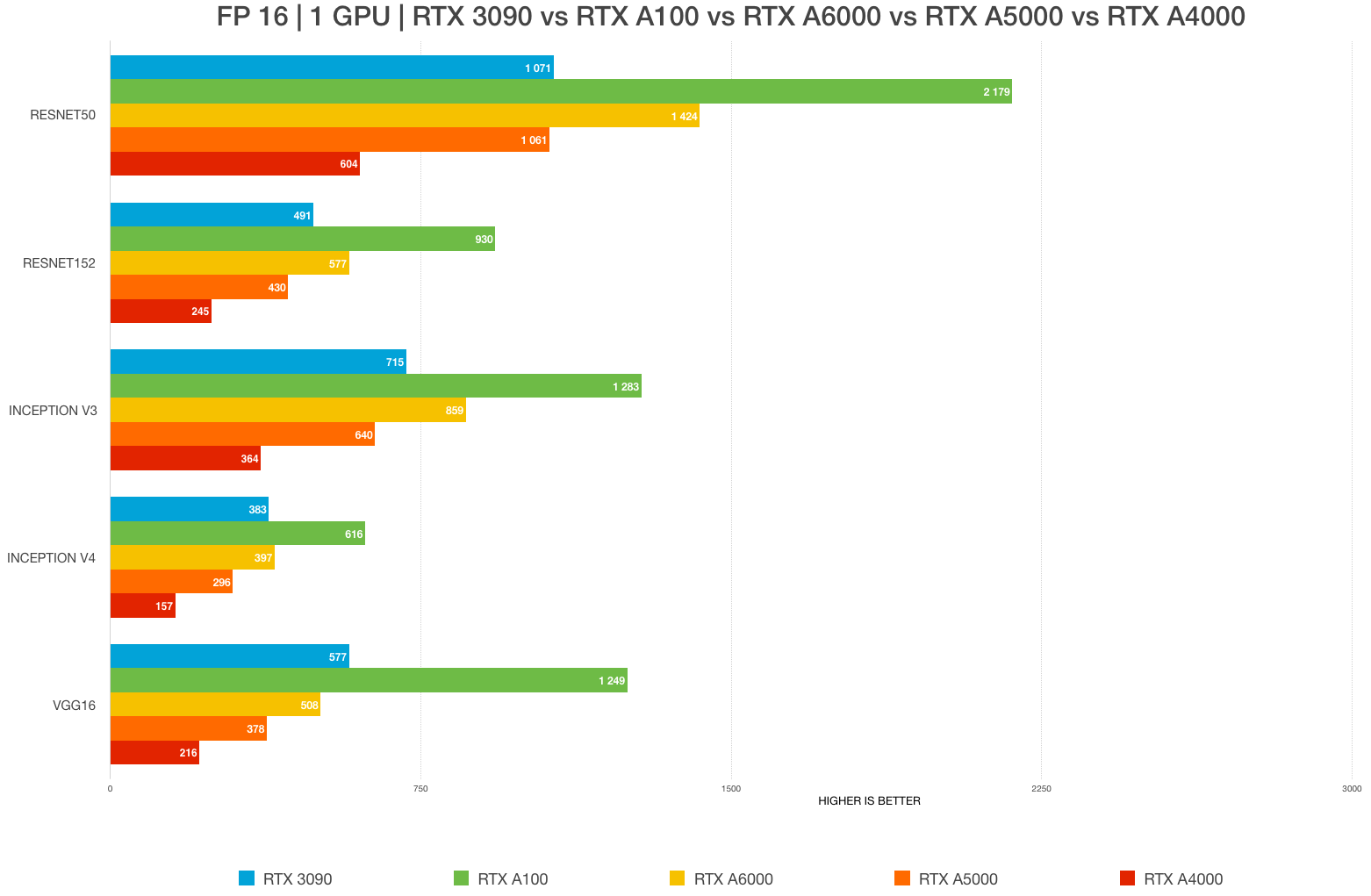

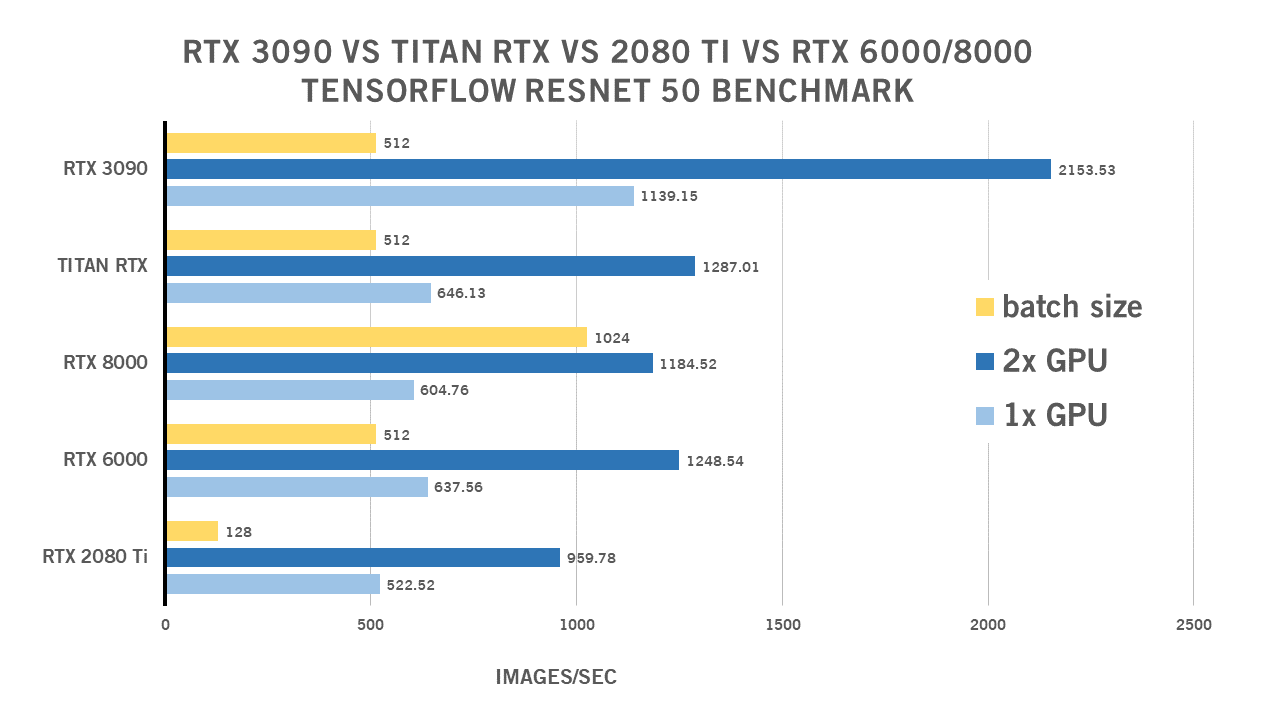

Best GPU for deep learning in 2022: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON Custom Workstation Computers.

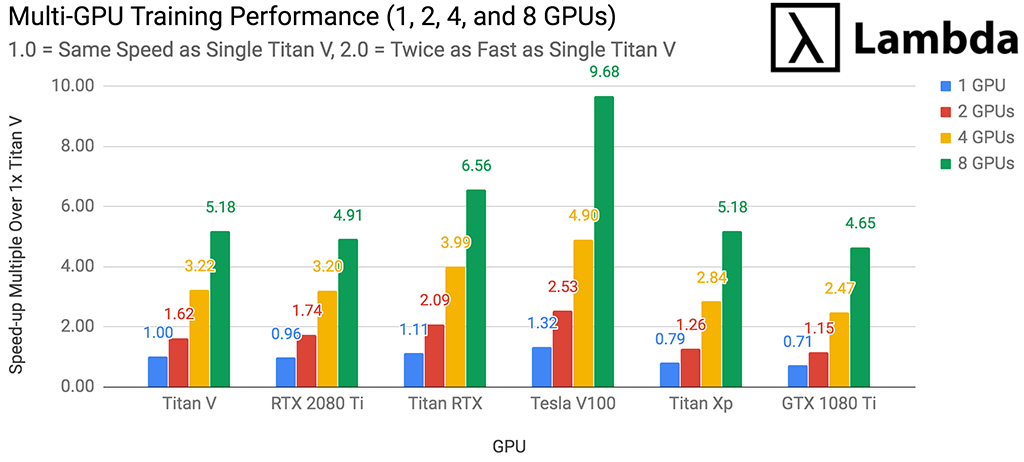

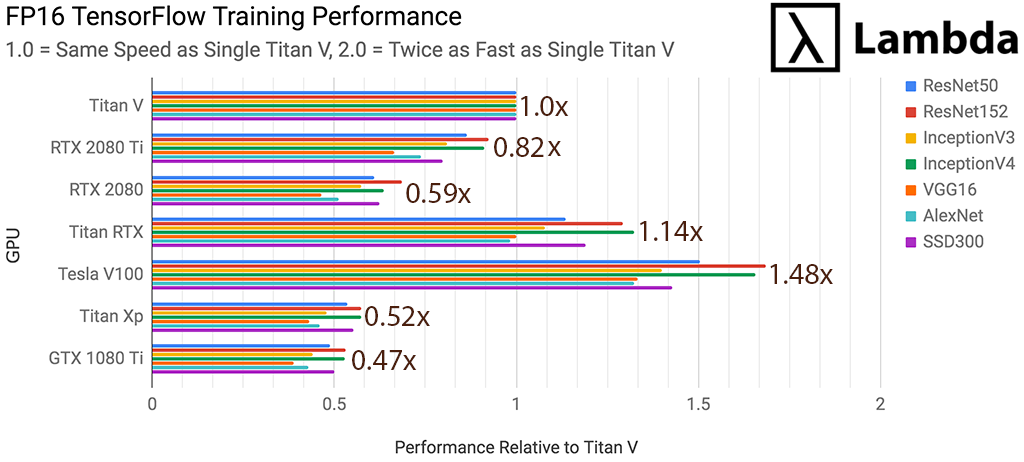

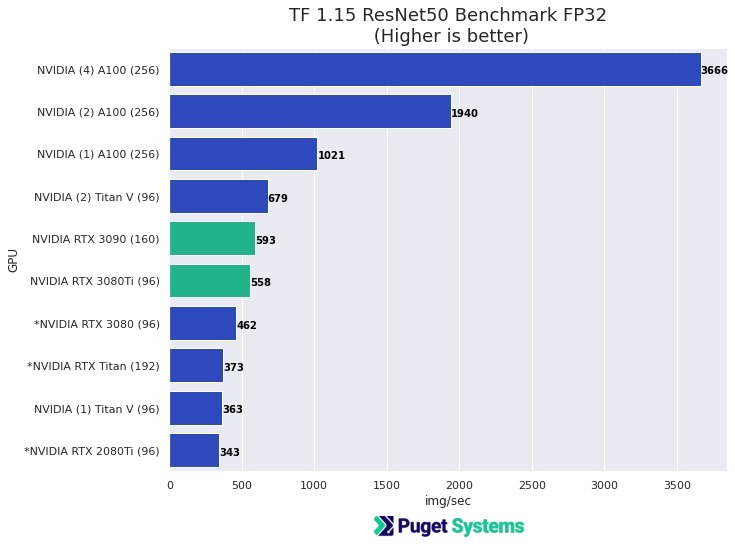

TensorFlow Performance with 1-4 GPUs -- RTX Titan, 2080Ti, 2080, 2070, GTX 1660Ti, 1070, 1080Ti, and Titan V | Puget Systems

GitHub - moritzhambach/CPU-vs-GPU-benchmark-on-MNIST: compare training duration of CNN with CPU (i7 8550U) vs GPU (mx150) with CUDA depending on batch size

![\[D\] My experience with running PyTorch on the M1 GPU : r/MachineLearning](https://preview.redd.it/p8pbnptklf091.png?width=1035&format=png&auto=webp&s=26bb4a43f433b1cd983bb91c37b601b5b01c0318)