Best GPU for deep learning in 2022: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON Custom Workstation Computers.

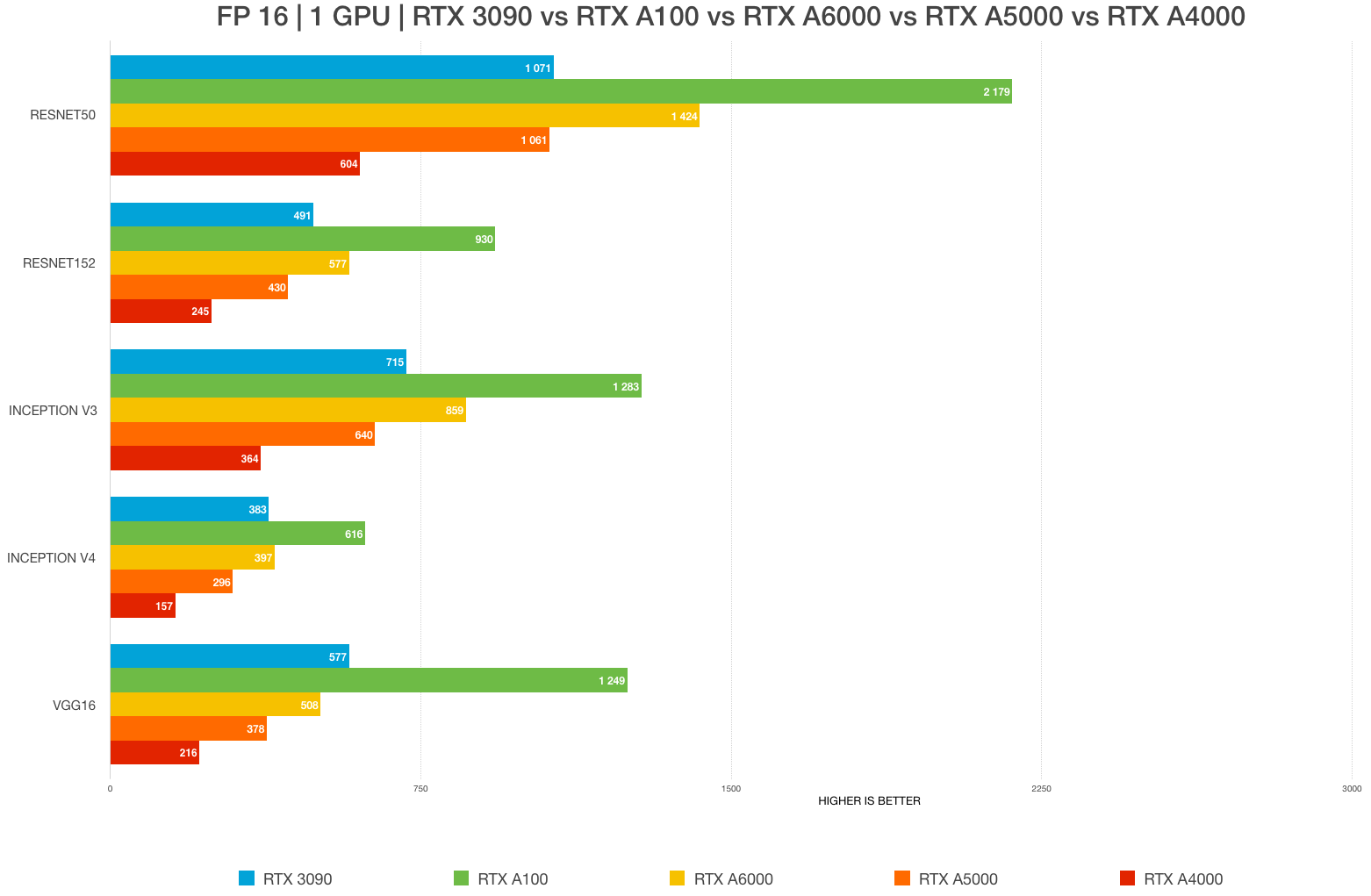

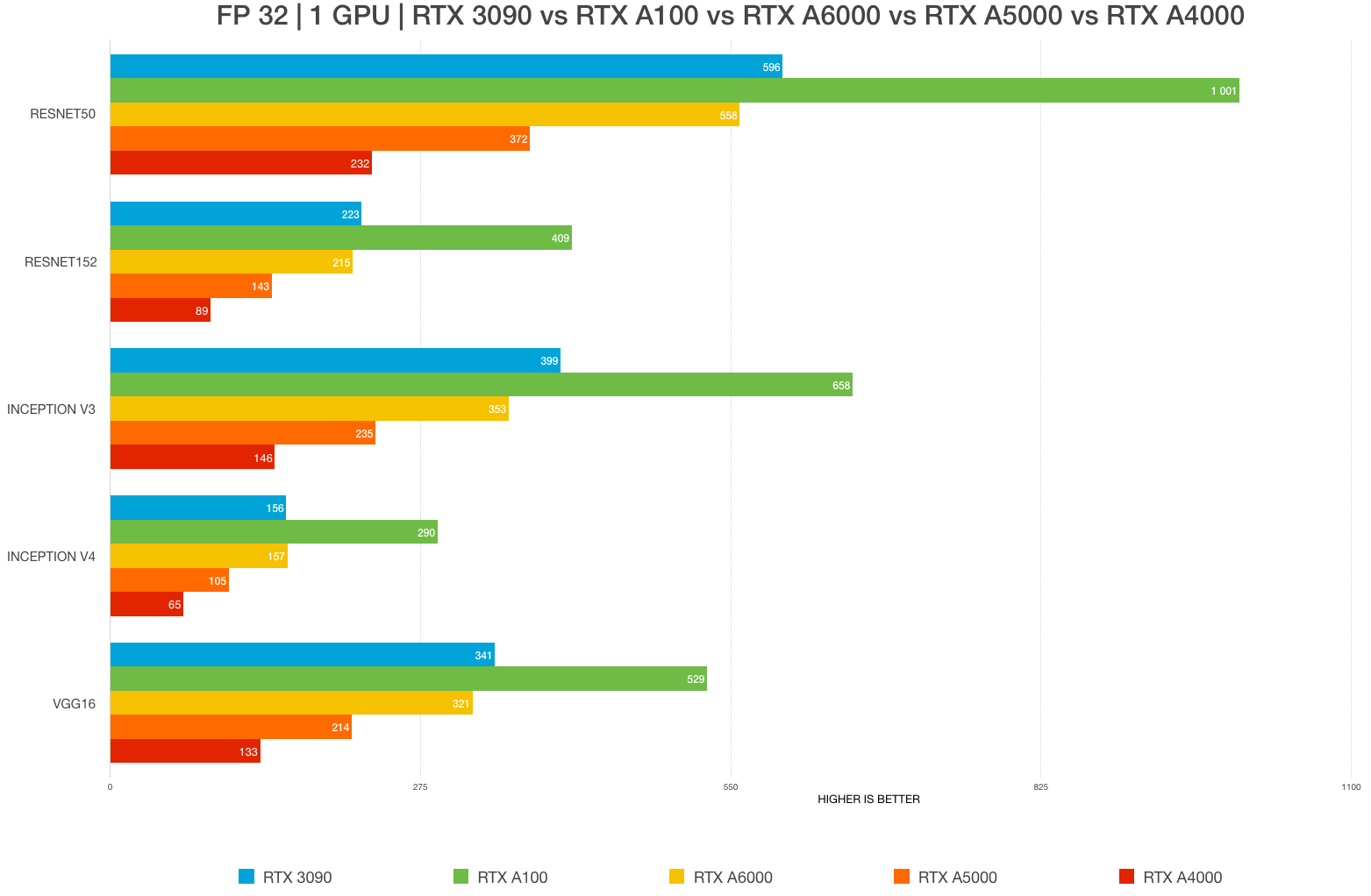


Numerical throughput comparison of TMVA-CPU, TMVA-OpenCL, TMVA-CUDA and...

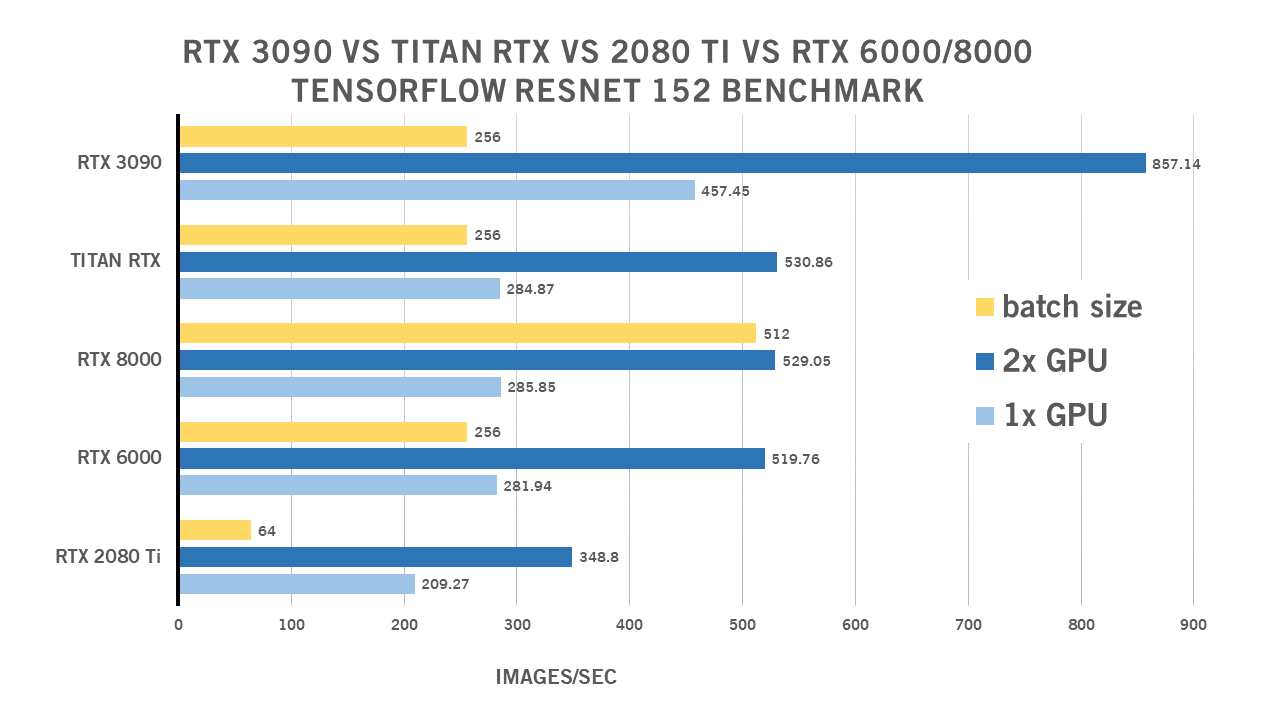

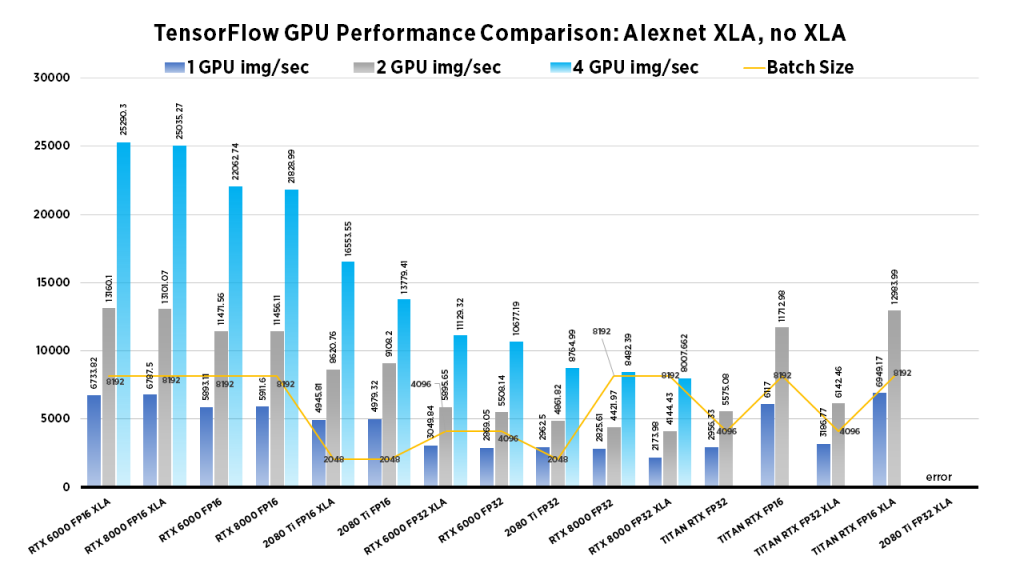

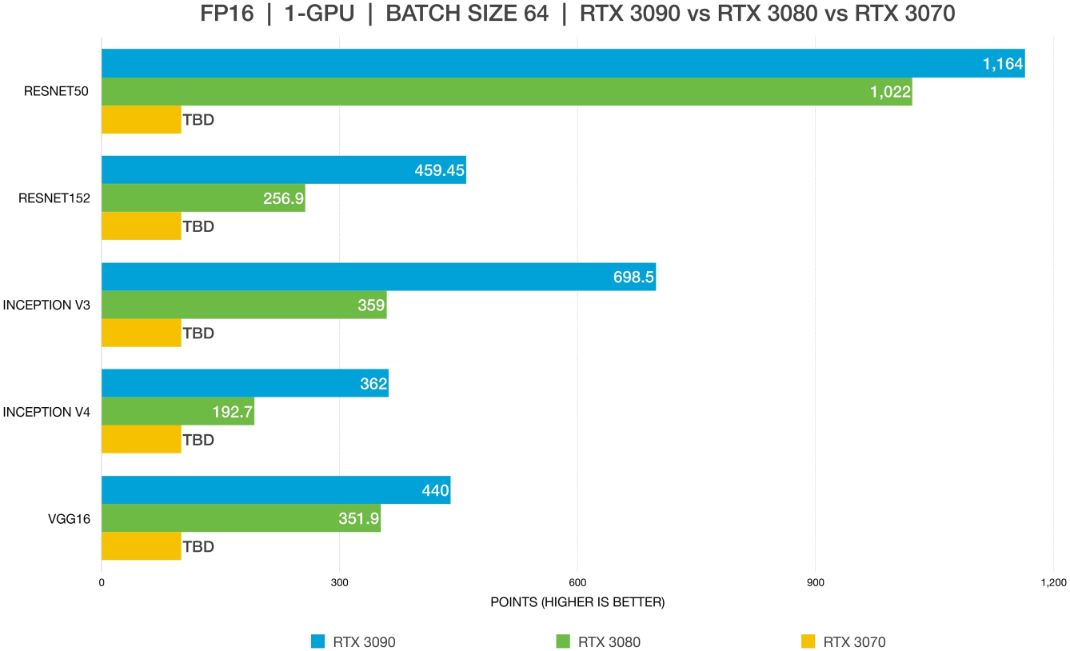

Deep Learning Benchmarks Comparison 2019: RTX 2080 Ti vs. TITAN RTX vs. RTX 6000 vs. RTX 8000. Selecting the Right GPU for your Needs | Exxact Blog

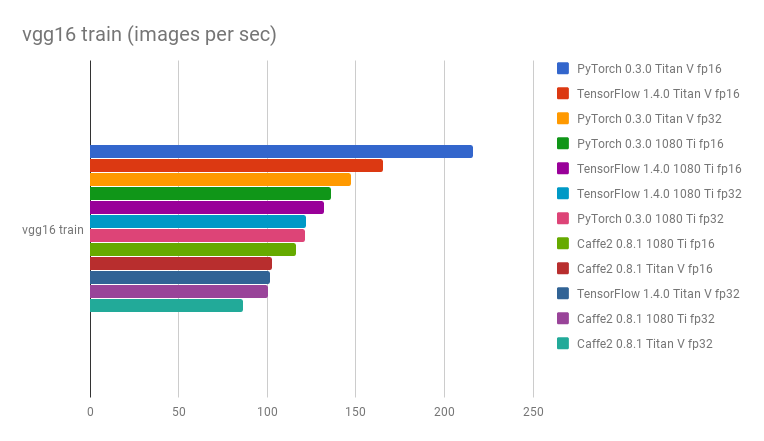

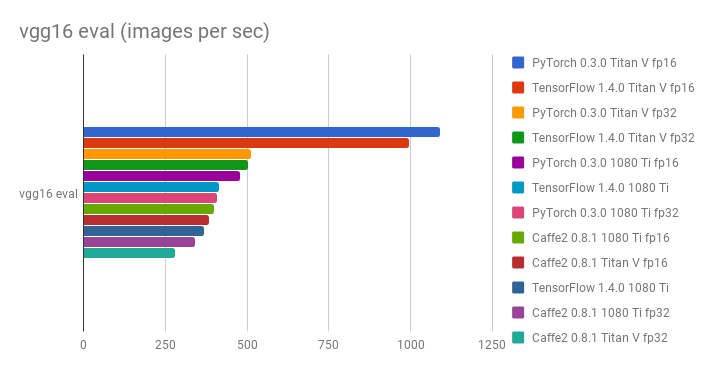

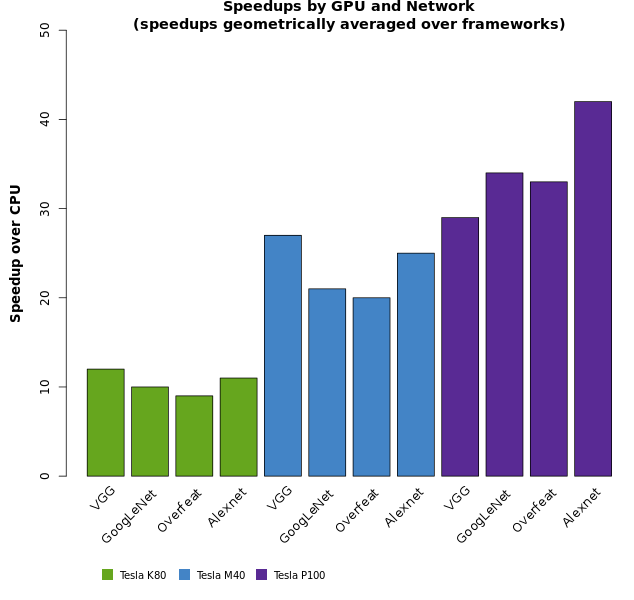

GitHub - u39kun/deep-learning-benchmark: Deep Learning Benchmark for comparing the performance of DL frameworks, GPUs, and single vs half precision

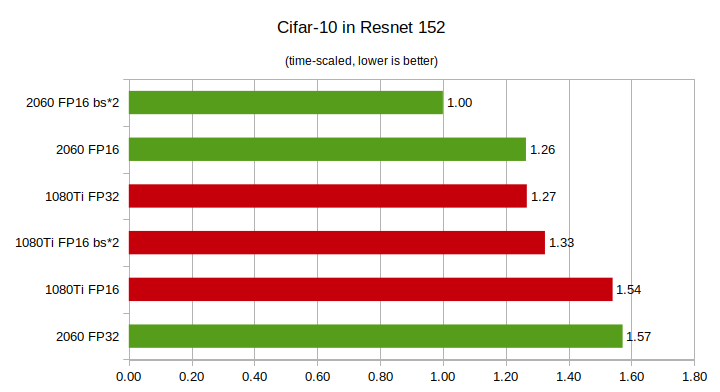

RTX 2060 Vs GTX 1080Ti Deep Learning Benchmarks: Cheapest RTX card Vs Most Expensive GTX card | by Eric Perbos-Brinck | Towards Data Science

![Best GPUs for Deep Learning (Machine Learning) 2021 [GUIDE]](https://i2.wp.com/saitechincorporated.com/wp-content/uploads/2021/06/gpu-performance-in-deep-learning-chart.png?resize=580%2C339&ssl=1)