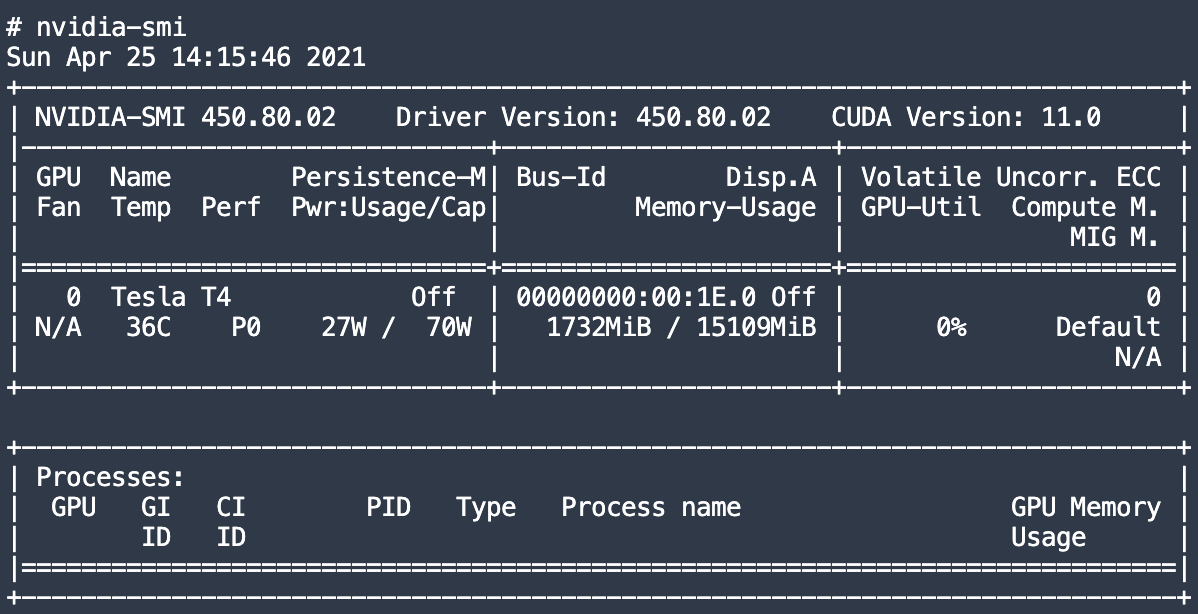

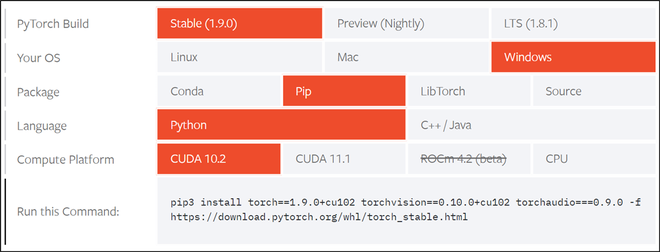

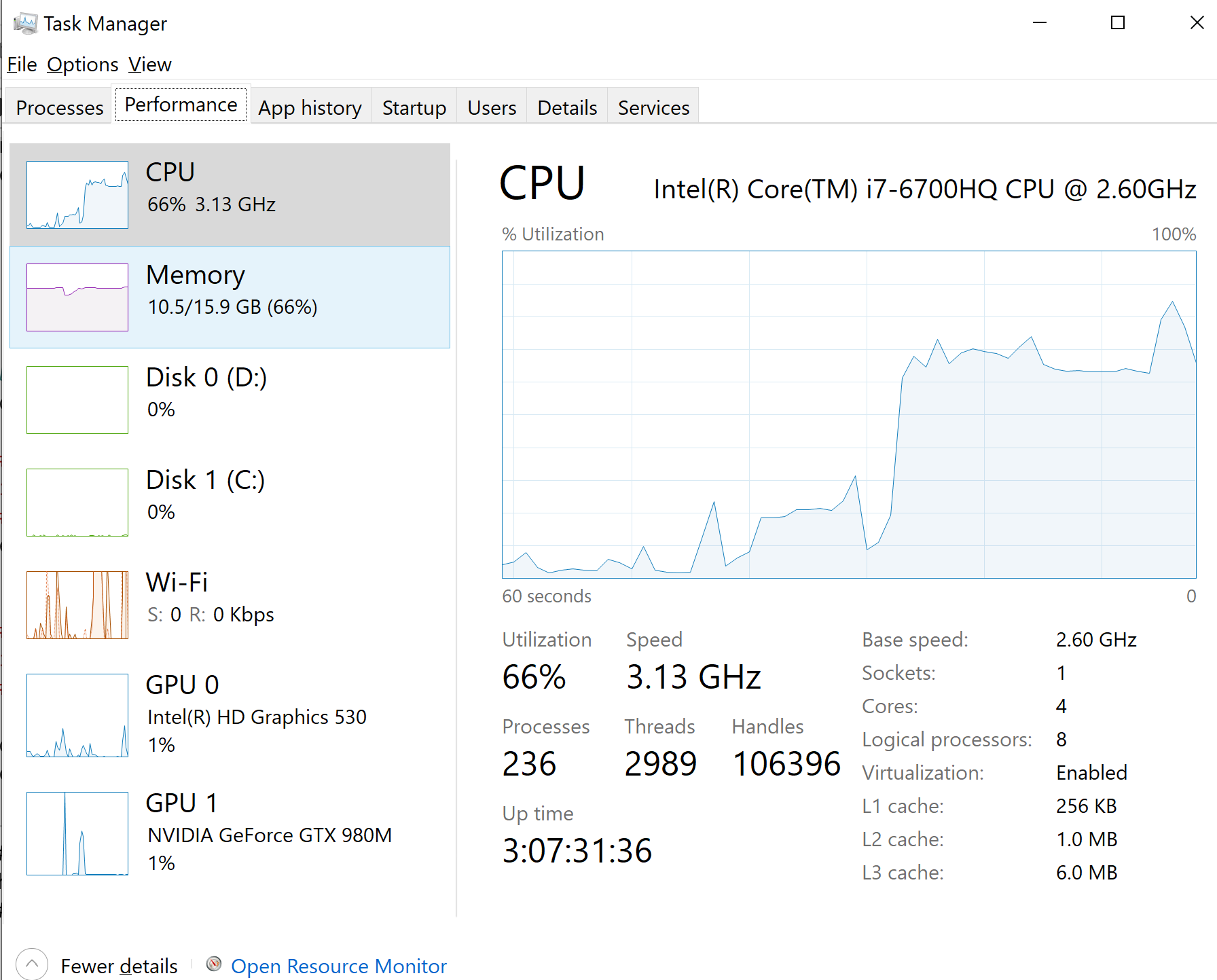

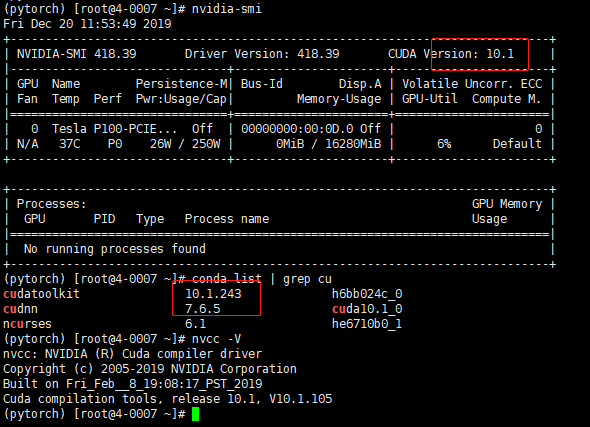

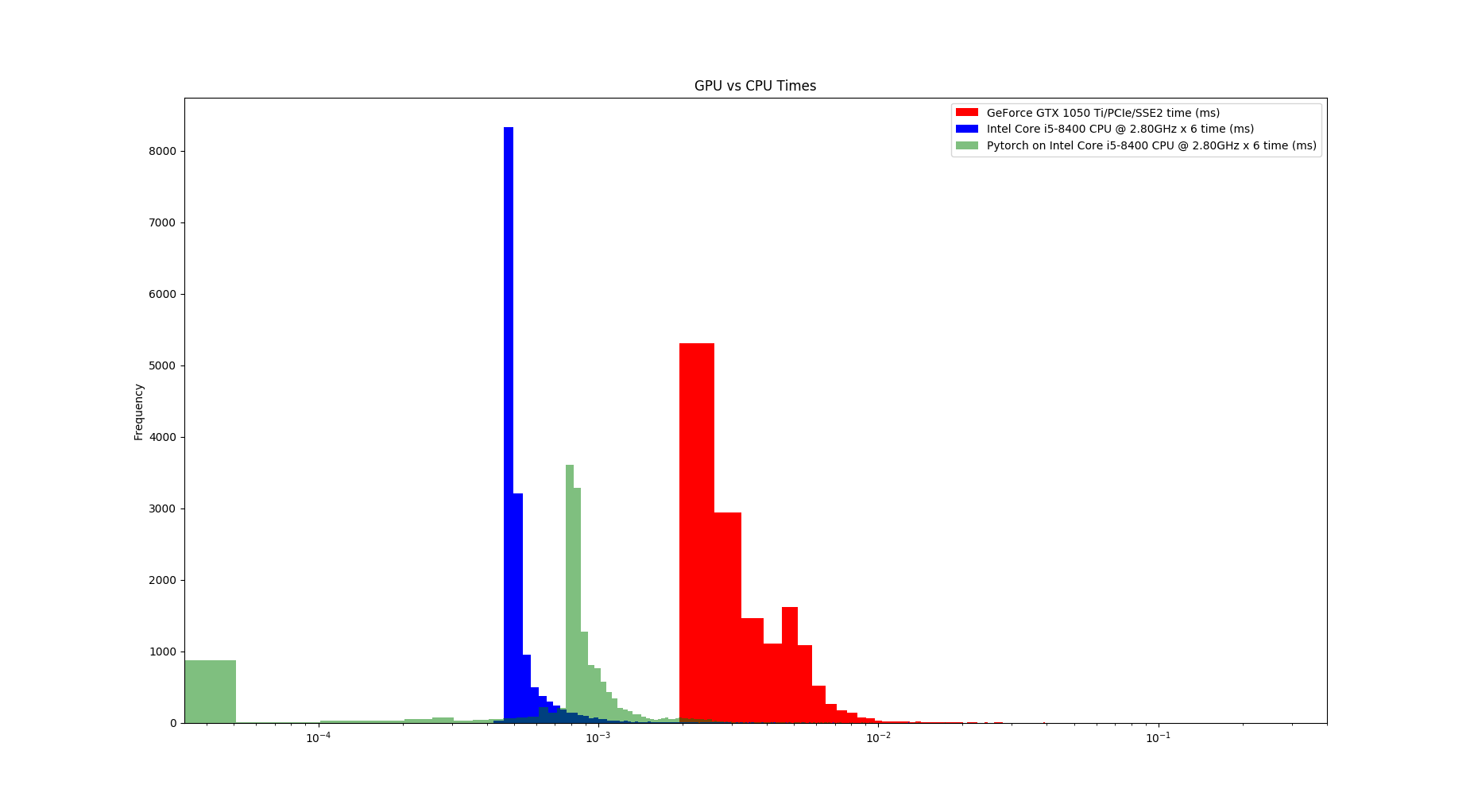

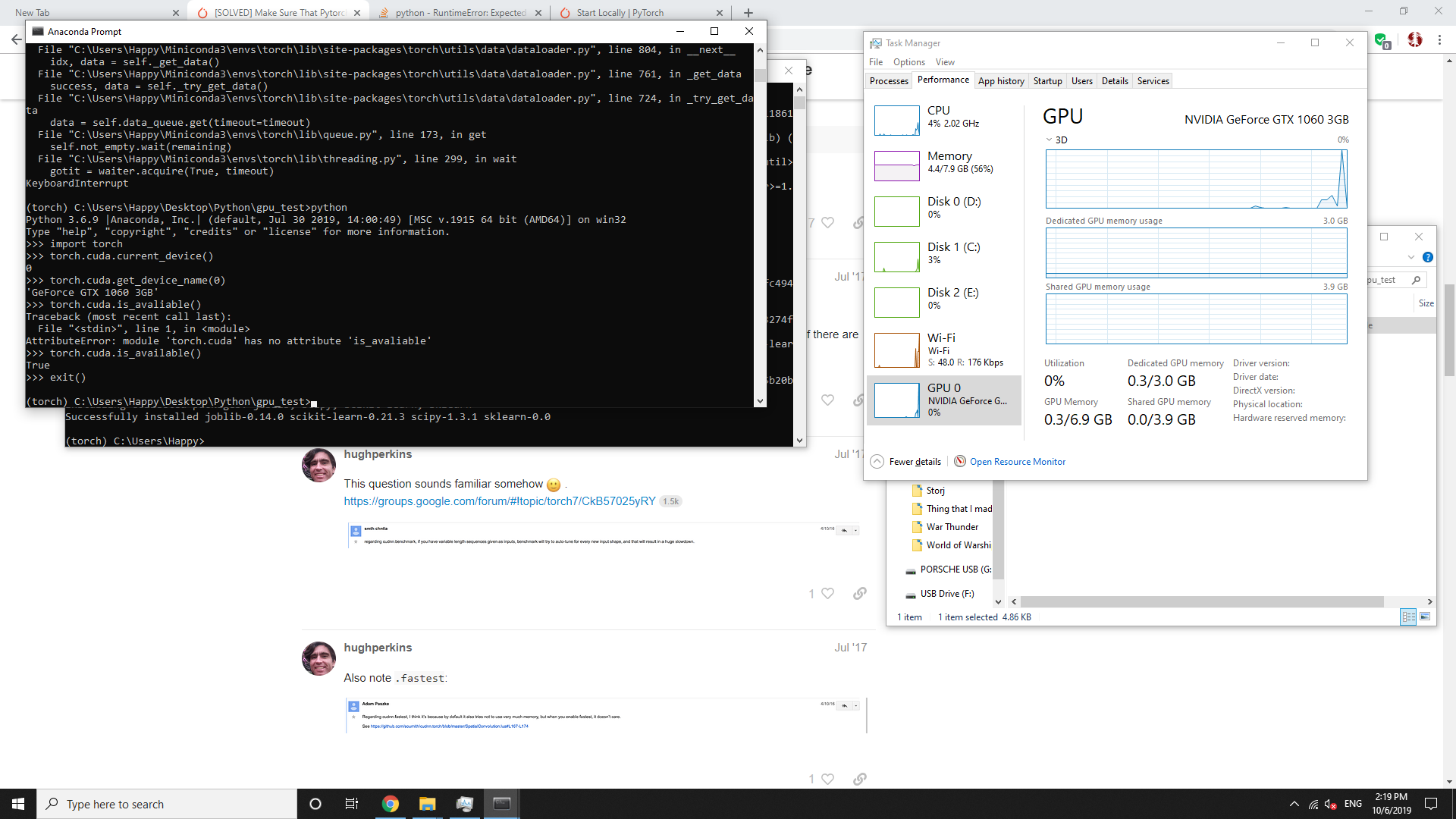

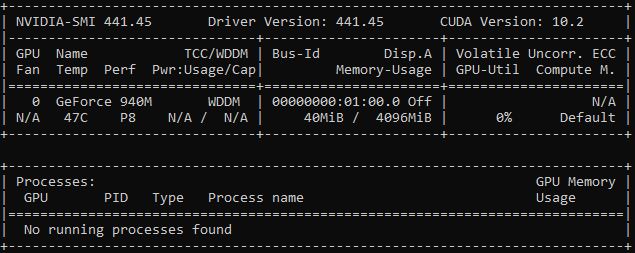

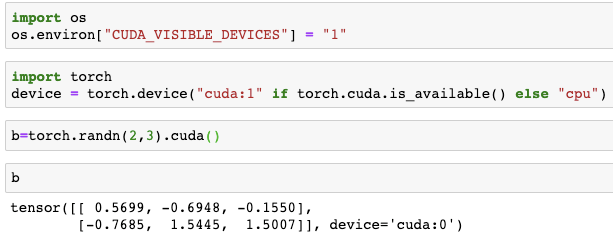

Use GPU in your PyTorch code. Recently I installed my gaming notebook… | by Marvin Wang, Min | AI³ | Theory, Practice, Business | Medium

Roberto Lopez on LinkedIn: #tensorflow #pytorch #artificialintelligence #machinelearning… | 40 comments

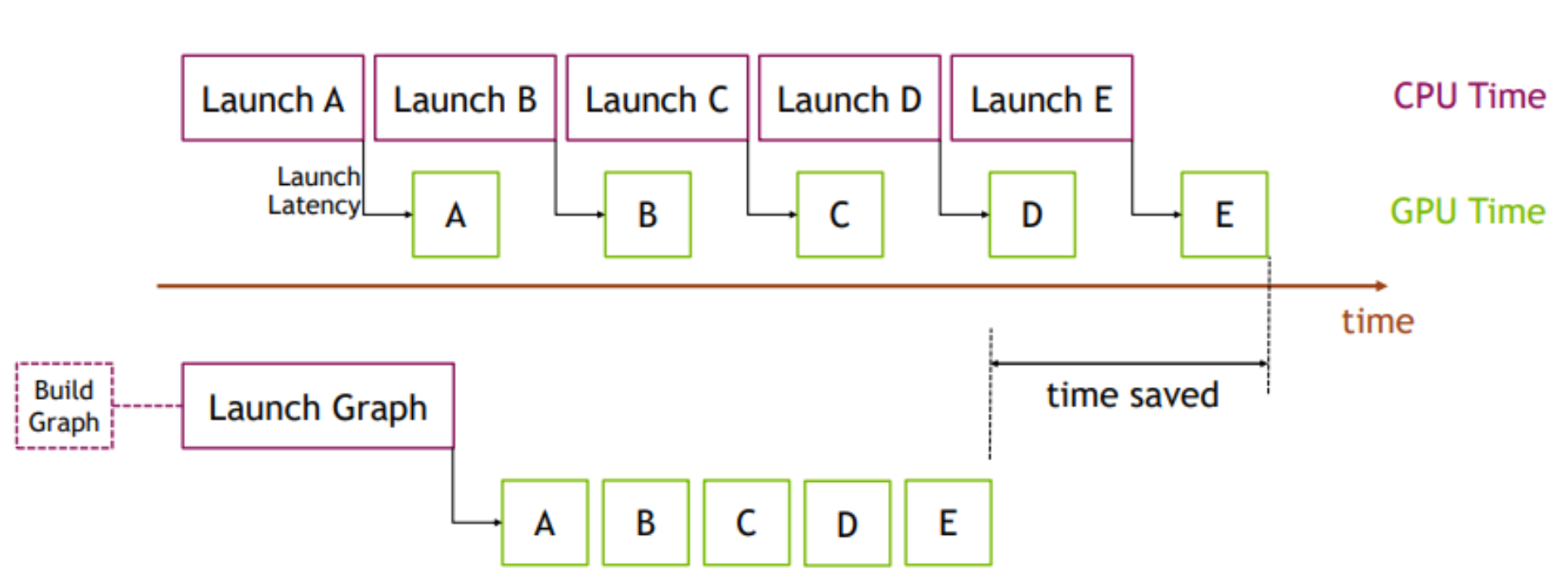

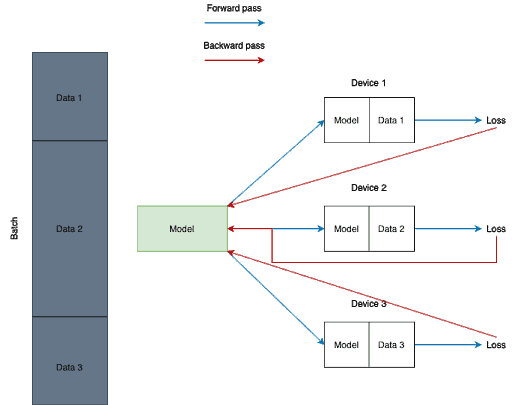

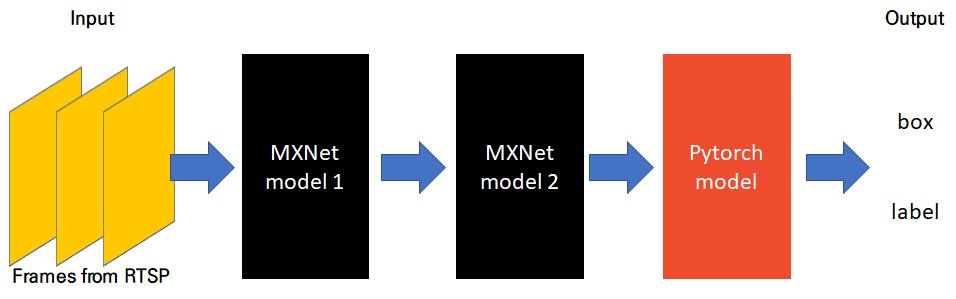

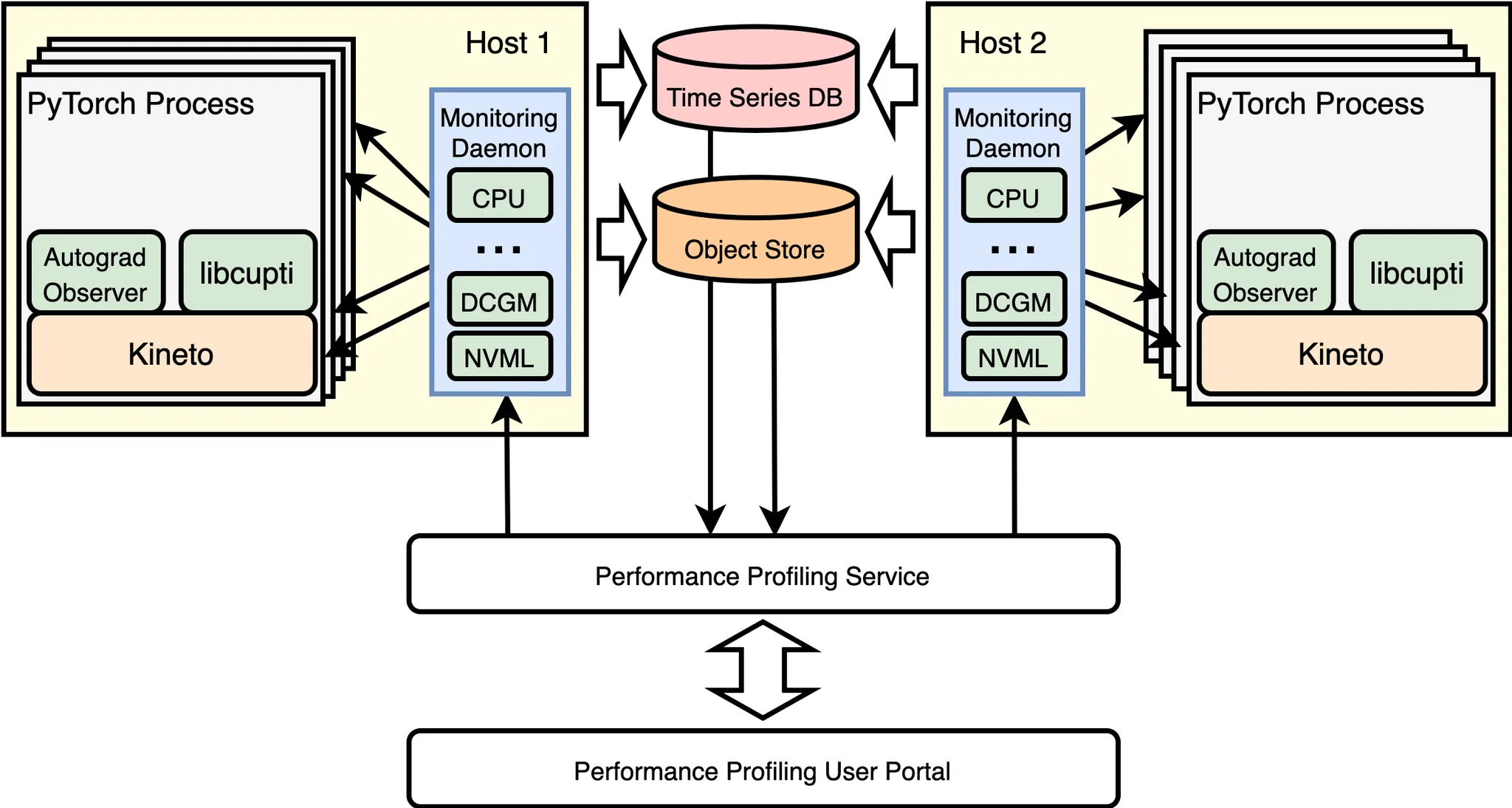

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer

![[D] My experience with running PyTorch on the M1 GPU : r/MachineLearning](https://preview.redd.it/p8pbnptklf091.png?width=1035&format=png&auto=webp&s=26bb4a43f433b1cd983bb91c37b601b5b01c0318)